6.3 Section 2

Open the file Drivers.sav:

# Load the data and put them in the object called "data"

data <- read.spss("Drivers.sav", to.data.frame = TRUE)6.3.1 Research question 1 (ANOVA): Does talking on the phone interfere with people’s driving skills?

The IV for this reseach question is condition, with conditions:

- hand-held phone

- hands-free phone

- control

The DV is reaction time in milliseconds in a driver simulation test, in variable RT.

6.3.2 Question 2.a

Perform the ANOVA. You can use lm(y ~ -1 + x) to remove the intercept from a regression with dummies, and get a separate mean for each group. The function aov() is an alternate interface for lm() that reports results in a way that matches the conventions for ANOVA analyses more closely.

Click for explanation

You can use summary(lm(y ~ -1 + x)) to get the means for each group:

results <- lm(formula = RT ~ -1 + condition, data = data)

summary(results)##

## Call:

## lm(formula = RT ~ -1 + condition, data = data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -317.50 -71.25 2.97 89.55 243.45

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## conditionhand-held 654.50 29.08 22.51 <2e-16 ***

## conditionhands-free 617.55 29.08 21.24 <2e-16 ***

## conditioncontrol 553.75 29.08 19.04 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 130.1 on 57 degrees of freedom

## Multiple R-squared: 0.9586, Adjusted R-squared: 0.9564

## F-statistic: 440 on 3 and 57 DF, p-value: < 2.2e-16And you can use aov() to get the sum of squares for the factor:

results <- aov(formula = RT ~ condition, data = data)

summary(results)## Df Sum Sq Mean Sq F value Pr(>F)

## condition 2 103909 51954 3.072 0.0541 .

## Residuals 57 964082 16914

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 16.3.3 Question 2.b

What are the assumptions you need to check?

Click for explanation

We can check several assumptions:

- Presence of outliers

- Normality of residuals

- Homogeneity of residuals

Let’s deal with them in order.

6.3.3.1 Presence of outliers:

In Y-space

We can check the range of the standardized (scale()) residuals for outliers in Y-space. The residuals are inside of the results object, so we can just extract them, standardize them, and get the range:

range(scale(results$residuals))## [1] -2.483778 1.904491What is your conclusiong about the outliers?

6.3.3.2 Normality of residuals

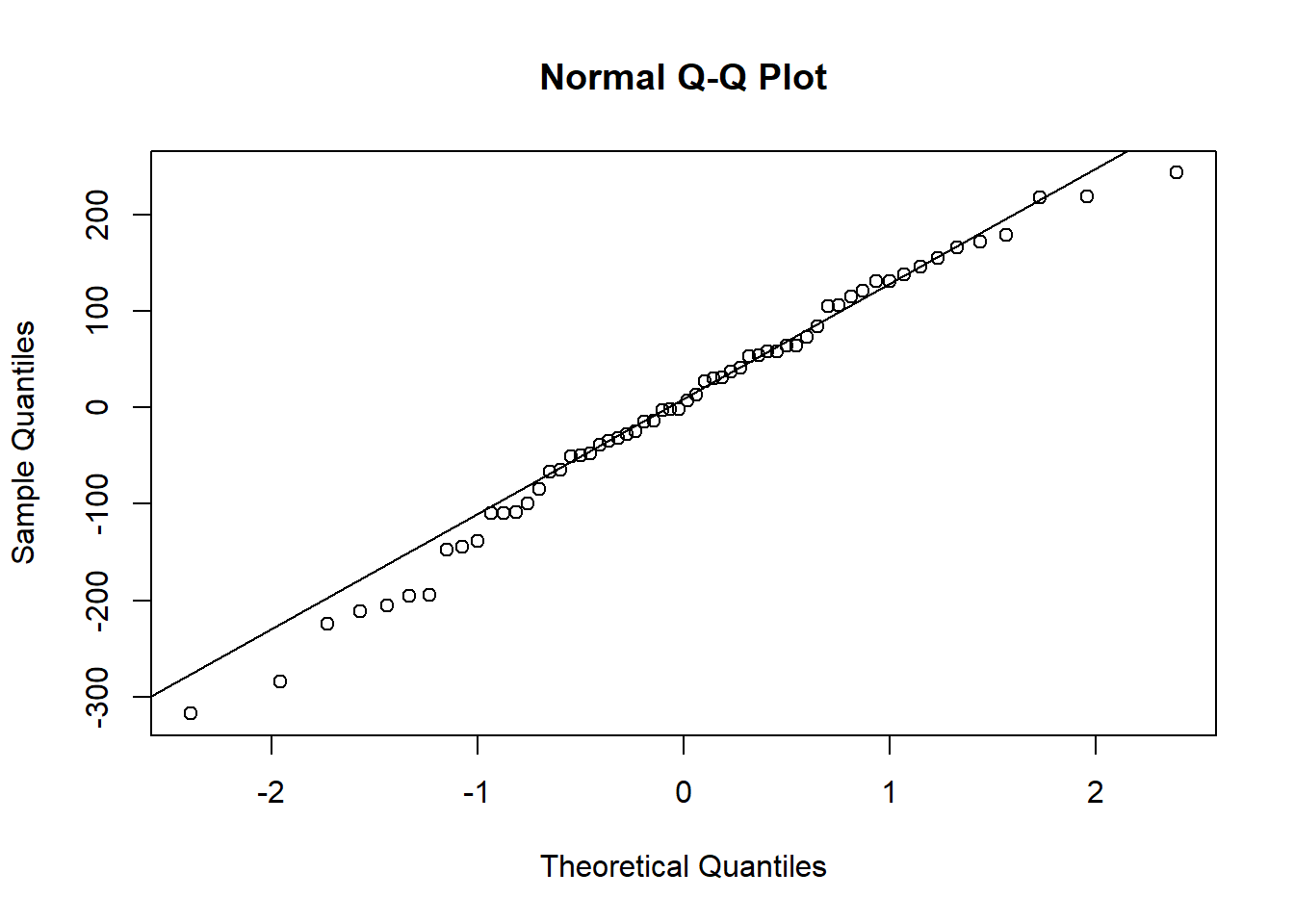

We can check the normality of residuals using a QQplot.

qqnorm(results$residuals)

qqline(results$residuals)

There appears to be some mild deviation from normality at the extremes.

You can also test for normality with the shapiro.test(x) function:

shapiro.test(results$residuals)##

## Shapiro-Wilk normality test

##

## data: results$residuals

## W = 0.98367, p-value = 0.60136.3.3.3 Homogeneity of Variances

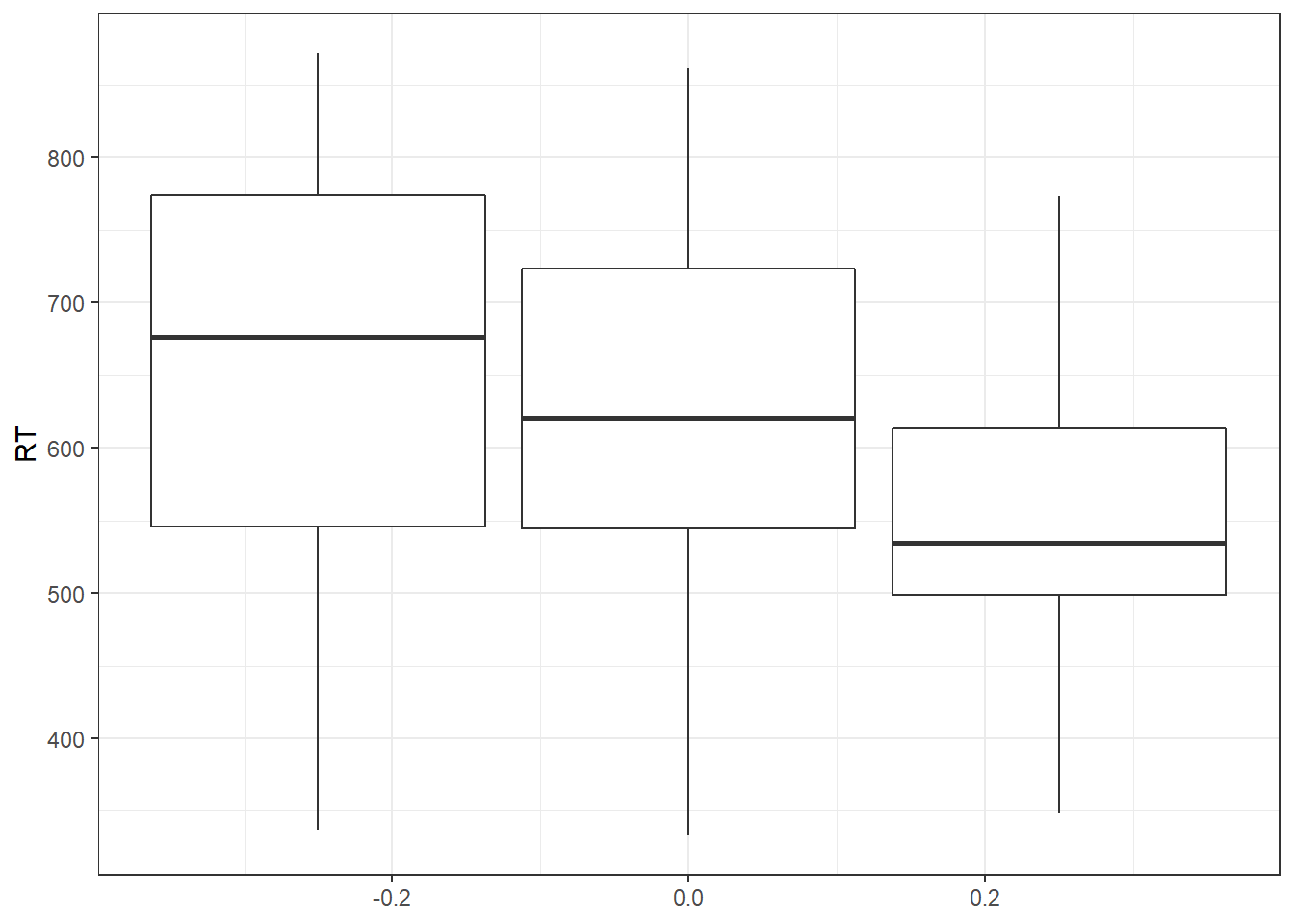

The bartlett.test() function provides a parametric K-sample test of the equality of variances. This test has the same hypotheses as the Levene’s test.

bartlett.test(formula = RT~condition, data = data)##

## Bartlett test of homogeneity of variances

##

## data: RT by condition

## Bartlett's K-squared = 2.7203, df = 2, p-value = 0.2566It can also be nice to use a paneled boxplot to visualize the distributions. For this, we will use ggplot2. This time, we introduce a new command, theme_bw(): A theme for the plot that conforms to APA standards. We can apply this theme to any figure created using ggplot():

library(ggplot2)

ggplot(data, aes(y = RT, group = condition)) +

geom_boxplot() +

theme_bw()

6.3.4 Question 2.c

Explain for each of the assumptions why they are important to check.

6.3.5 Question 2.d

What are your conclusions regarding the assumption checks?

Click for explanation

There are no outliers in X-space, no evidence for (severe) deviations from normality of residuals, and no evidence for (severe) heteroscedasticity.6.3.6 Question 2.e

Answer the research question.

Hint: Use summary() and TukeyHSD().

Click for explanation

We can examine the overall F-test, which is significant:

summary(results)## Df Sum Sq Mean Sq F value Pr(>F)

## condition 2 103909 51954 3.072 0.0541 .

## Residuals 57 964082 16914

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1TukeyHSD(results)## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = RT ~ condition, data = data)

##

## $condition

## diff lwr upr p adj

## hands-free-hand-held -36.95 -135.917 62.017041 0.6434900

## control-hand-held -100.75 -199.717 -1.782959 0.0451401

## control-hands-free -63.80 -162.767 35.167041 0.2750829Post-hoc tests with Bonferroni correction can be obtained using TukeyHSD(results). We notice that none of these comparisons are significant. However, the research question was Does talking on the phone interfere with peoples driving skills? There are two conditions for talking on the phone. We could thus test a planned contrast of these two conditions against the control condition, instead of all possible post-hoc tests:

The standard contrasts are dummy coded:

contrasts(data$condition)## hands-free control

## hand-held 0 0

## hands-free 1 0

## control 0 1We can replace these with planned contrasts for “phone” vs control, and hand-held vs hands-free:

contrasts(data$condition) <- cbind(phoneVcontrol = c(-1, -1, 2), handVfree = c(-1, 1, 0))

results <- aov(RT ~ condition, data)

# Ask for the lm summary, which gives you t-tests for the planned contrasts:

summary.lm(results)##

## Call:

## aov(formula = RT ~ condition, data = data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -317.50 -71.25 2.98 89.55 243.45

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 608.60 16.79 36.248 <2e-16 ***

## conditionphoneVcontrol -27.42 11.87 -2.310 0.0245 *

## conditionhandVfree -18.47 20.56 -0.898 0.3727

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 130.1 on 57 degrees of freedom

## Multiple R-squared: 0.09729, Adjusted R-squared: 0.06562

## F-statistic: 3.072 on 2 and 57 DF, p-value: 0.054086.3.7 Research question 2 (ANCOVA): Are there differences in reaction time between the conditions when controlling for age?

6.3.8 Question 2.f

What are the assumptions you need to check?

Click for explanation

Assumptions for ANCOVA are the same as for ANOVA (no outliers, normality of residuals, homoscedasticity). ANCOVA has the following additional assumptions:

- Homogeneity of regression slopes for the covariate (no interaction between factor variable and covariate)

- The covariate is independent of the treatment effects. I.e. there is no difference in the covariate between the groups of the independent variable.

6.3.9 Question 2.g

Explain for each of the assumptions why they are important to check.

6.3.10 Question 2.h

Check the assumptions of ANCOVA.

Hint: Within formulas, you can use * instead of + to include interaction effects.

Click for explanation

6.3.10.1 Homogeneity of regression slopes

Add the interaction to the model and test whether the interaction is significant:

results_age <- aov(RT ~ condition + age, data)

results_age_int <- aov(RT ~ condition * age, data)

summary(results_age_int)## Df Sum Sq Mean Sq F value Pr(>F)

## condition 2 103909 51954 4.532 0.0151 *

## age 1 320454 320454 27.955 2.3e-06 ***

## condition:age 2 24622 12311 1.074 0.3488

## Residuals 54 619005 11463

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1#Or you could use `anova()` to compare two different models

anova(results_age, results_age_int)What would your conclusion be about this assumption?

Click for explanation

The interaction is NOT significant; no evidence for violation of the assumption.

6.3.10.2 The covariate is independent of the treatment effects

results_indep <- aov(age ~ condition, data)

summary(results_indep)## Df Sum Sq Mean Sq F value Pr(>F)

## condition 2 137 68.55 0.659 0.521

## Residuals 57 5926 103.97What would your conclusion be about this assumption?

Click for explanation

The covariate is not significantly related to treatment effect. The assumption is met.

6.3.11 Question 2.i

Answer the research question. (Do you have to include the interaction or not?)

Click for explanation

results <- aov(formula = RT ~ condition + age, data = data)

TukeyHSD(results)## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = RT ~ condition + age, data = data)

##

## $condition

## diff lwr upr p adj

## hands-free-hand-held -36.95 -118.5708 44.67082 0.5242511

## control-hand-held -100.75 -182.3708 -19.12918 0.0119407

## control-hands-free -63.80 -145.4208 17.82082 0.1533777The handheld-condition has a significant higher reaction time than the control condition